Denoising Diffusion Probabilistic Models

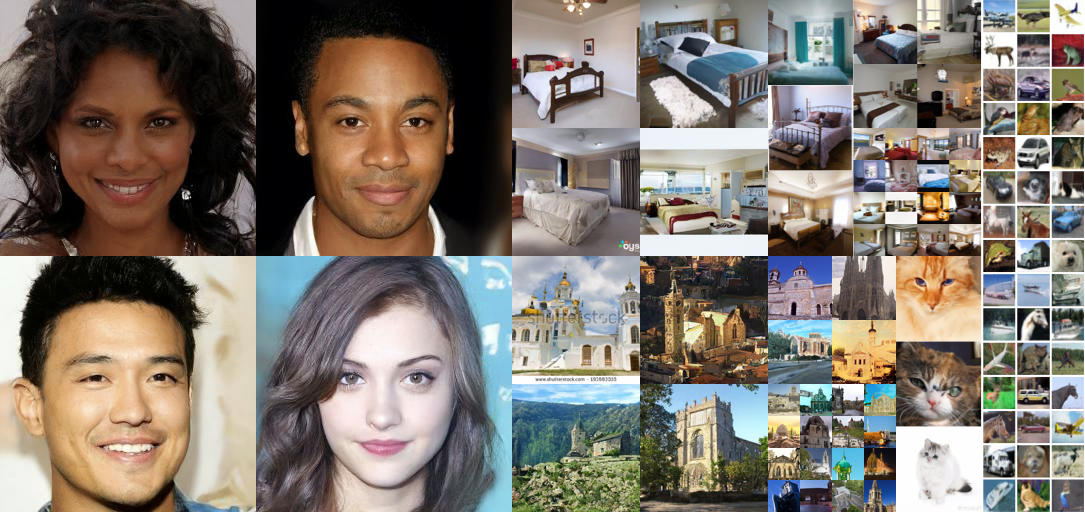

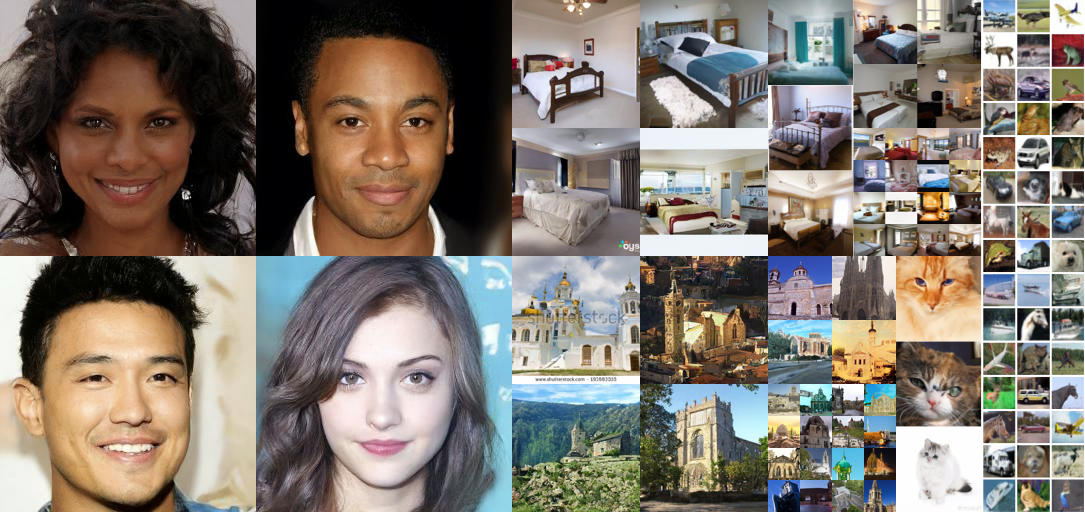

Images generated unconditionally by our probabilistic model. These are not real people, places, animals or objects.

Diffusion probabilistic models are parameterized Markov chains trained to gradually denoise data. We estimate parameters of the generative process p.

We present high quality image synthesis results using diffusion probabilistic models, a class of latent variable models inspired by considerations from nonequilibrium thermodynamics. Our best results are obtained by training on a weighted variational bound designed according to a novel connection between diffusion probabilistic models and denoising score matching with Langevin dynamics, and our models naturally admit a progressive lossy decompression scheme that can be interpreted as a generalization of autoregressive decoding. On the unconditional CIFAR10 dataset, we obtain an Inception score of 9.46 and a state-of-the-art FID score of 3.17. On 256x256 LSUN, we obtain sample quality similar to ProgressiveGAN.
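
The noising side of the Markov chain can be sketched in a few lines. The example below is a minimal illustration, not the released implementation: it assumes the linear variance schedule reported in the paper (1000 steps, from 1e-4 to 0.02) and the closed form x_t ~ N(sqrt(alpha_bar_t) x_0, (1 - alpha_bar_t) I). The function names `linear_beta_schedule`, `cumulative_alphas`, and `q_sample` are hypothetical, and images are stood in for by flat lists of floats.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_1..beta_T, linearly spaced (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Jump straight from clean data x0 to the noisy x_t (t is 0-indexed).

    Returns both x_t and the Gaussian noise that was mixed in.
    """
    signal = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal * v + noise_scale * e for v, e in zip(x0, noise)]
    return xt, noise


if __name__ == "__main__":
    alpha_bars = cumulative_alphas(linear_beta_schedule())
    xt, _ = q_sample([0.5, -0.25, 1.0], 999, alpha_bars, random.Random(0))
    print(alpha_bars[-1], xt)  # by the last step almost no signal remains
```

Under this schedule the signal coefficient decays to nearly zero by the final step, which is why sampling can start from pure Gaussian noise.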

We train our models on 256x256 CelebA-HQ and LSUN datasets.

CelebA-HQ 256x256 samples.

LSUN 256x256 Church, Bedroom, and Cat samples. Notice that our models occasionally generate dataset watermarks.

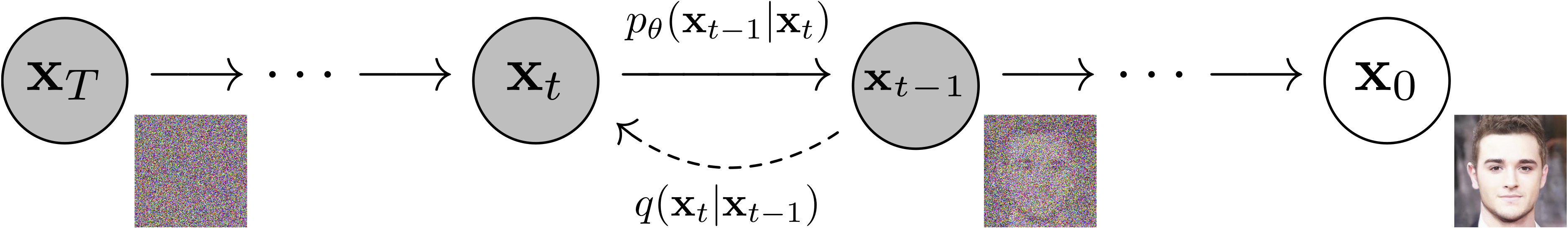

We show that diffusion probabilistic models resemble denoising score matching with Langevin dynamics sampling, yet provide log likelihoods and rate-distortion curves in one evaluation of the variational bound.

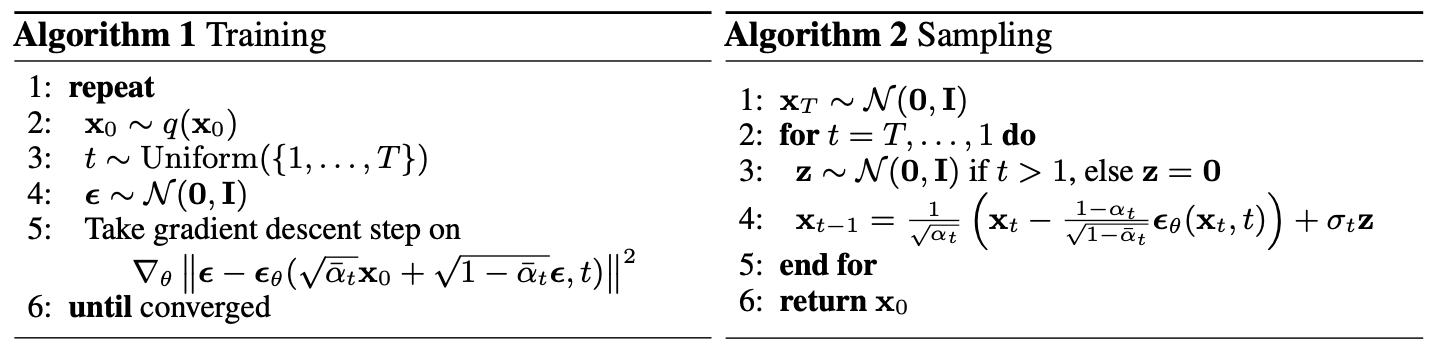

Our training and sampling algorithms for diffusion probabilistic models. Note the resemblance to denoising score matching and Langevin dynamics.
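
Since the algorithms appear only in the figure, here is a rough sketch of what the two procedures look like, following the noise-prediction form published in the paper. It is an illustration under assumptions rather than the released code: `eps_model` is a hypothetical callable `(x_t, t) -> predicted noise` standing in for the neural network, data are flat lists of floats, the schedule is the linear one from the paper, and the sampling variance is set to beta_t (one of the choices the paper discusses).

```python
import math
import random


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear betas and their cumulative products alpha_bar (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def training_loss(eps_model, x0, betas, alpha_bars, rng):
    """One Monte Carlo term of the simplified objective.

    Pick a random timestep, noise x0 to x_t, and score how well the
    model recovers the noise (mean squared error).
    """
    t = rng.randrange(len(betas))
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    xt = [signal * v + noise_scale * e for v, e in zip(x0, noise)]
    predicted = eps_model(xt, t)
    return sum((e - p) ** 2 for e, p in zip(noise, predicted)) / len(x0)


def sample(eps_model, dim, betas, alpha_bars, rng):
    """Ancestral sampling: start from Gaussian noise and denoise step by step."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(len(betas))):
        predicted = eps_model(x, t)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        inv_sqrt_alpha = 1.0 / math.sqrt(1.0 - betas[t])
        # No noise is added on the final step (0-indexed t == 0).
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0
        x = [
            inv_sqrt_alpha * (v - coef * p) + sigma * rng.gauss(0.0, 1.0)
            for v, p in zip(x, predicted)
        ]
    return x
```

In practice the loss would be averaged over a minibatch and minimized by gradient descent on the network parameters; the sketch only shows the quantity being minimized. The injected Gaussian noise in each sampling step is what gives the procedure its resemblance to Langevin dynamics.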

Unconditional CIFAR10 samples. Inception Score=9.46, FID=3.17.

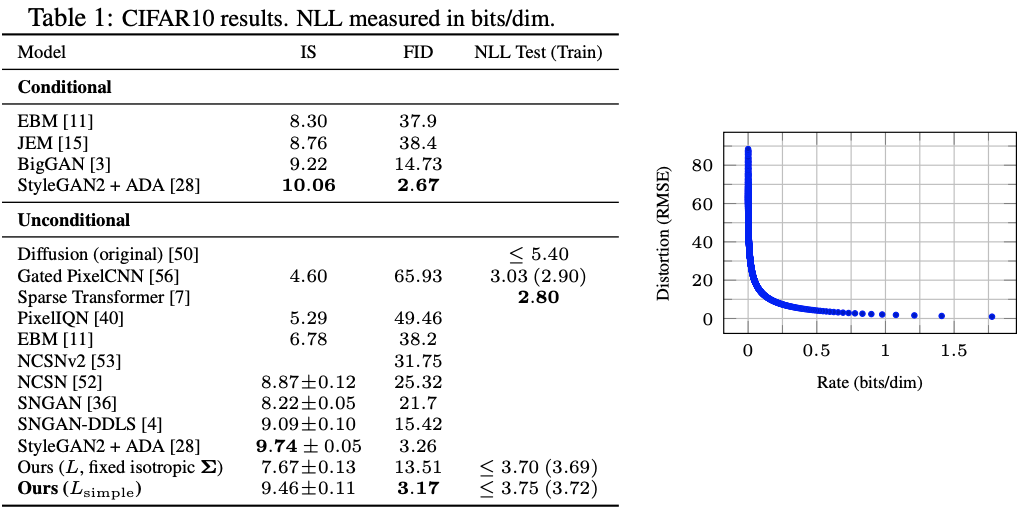

CIFAR10 sample quality and lossless compression metrics (left), unconditional test set rate-distortion curve for lossy compression (right).

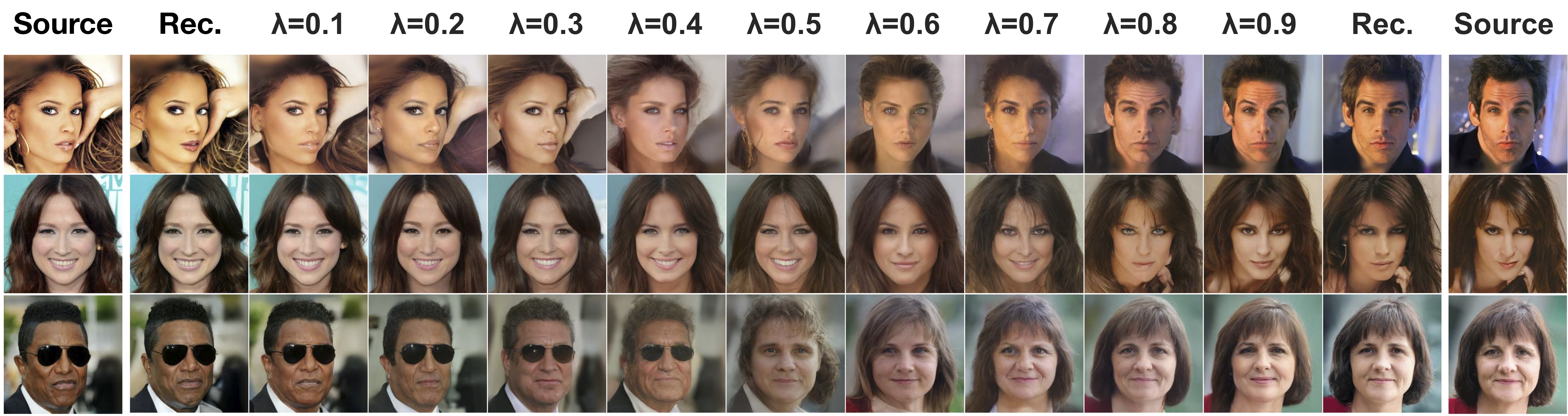

We can interpolate images in latent space, in effect removing artifacts introduced by pixel-space interpolation. Interpolations can be customized in a coarse-to-fine manner by choosing the timestep where mixing is performed. Reconstructions are high-quality.
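
The interpolation procedure can be sketched as: diffuse both source images to the same timestep, mix the two noisy latents linearly, then run the reverse process from that timestep. The code below is a hedged illustration under assumptions, not the released implementation: `eps_model` is a hypothetical noise-prediction callable `(x_t, t) -> noise`, images are flat lists of floats, and `betas` and `alpha_bars` follow the paper's linear schedule. The argument `t_mix` is the timestep where mixing is performed; larger values give coarser, more varied interpolations.

```python
import math
import random


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear betas and cumulative products alpha_bar (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def diffuse(x0, t, alpha_bars, rng):
    """Stochastic encoder: noise a clean image x0 to timestep t (0-indexed)."""
    signal = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


def denoise_from(eps_model, x, t_start, betas, alpha_bars, rng):
    """Run the reverse process from timestep t_start down to 0."""
    for t in reversed(range(t_start + 1)):
        predicted = eps_model(x, t)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        inv_sqrt_alpha = 1.0 / math.sqrt(1.0 - betas[t])
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0
        x = [
            inv_sqrt_alpha * (v - coef * p) + sigma * rng.gauss(0.0, 1.0)
            for v, p in zip(x, predicted)
        ]
    return x


def interpolate(eps_model, x0_a, x0_b, lam, t_mix, betas, alpha_bars, rng):
    """Mix two images in latent space at timestep t_mix, then decode.

    lam = 0 decodes the latent of x0_a, lam = 1 the latent of x0_b.
    """
    latent_a = diffuse(x0_a, t_mix, alpha_bars, rng)
    latent_b = diffuse(x0_b, t_mix, alpha_bars, rng)
    mixed = [(1.0 - lam) * a + lam * b for a, b in zip(latent_a, latent_b)]
    return denoise_from(eps_model, mixed, t_mix, betas, alpha_bars, rng)
```

Because decoding passes through the learned reverse process, the result is pulled back toward the data distribution, unlike a direct pixel-space blend of the two sources.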

Interpolations of CelebA-HQ 256x256 images with 500 timesteps of diffusion. Real actors are on the left and right. Others are generated by our model.

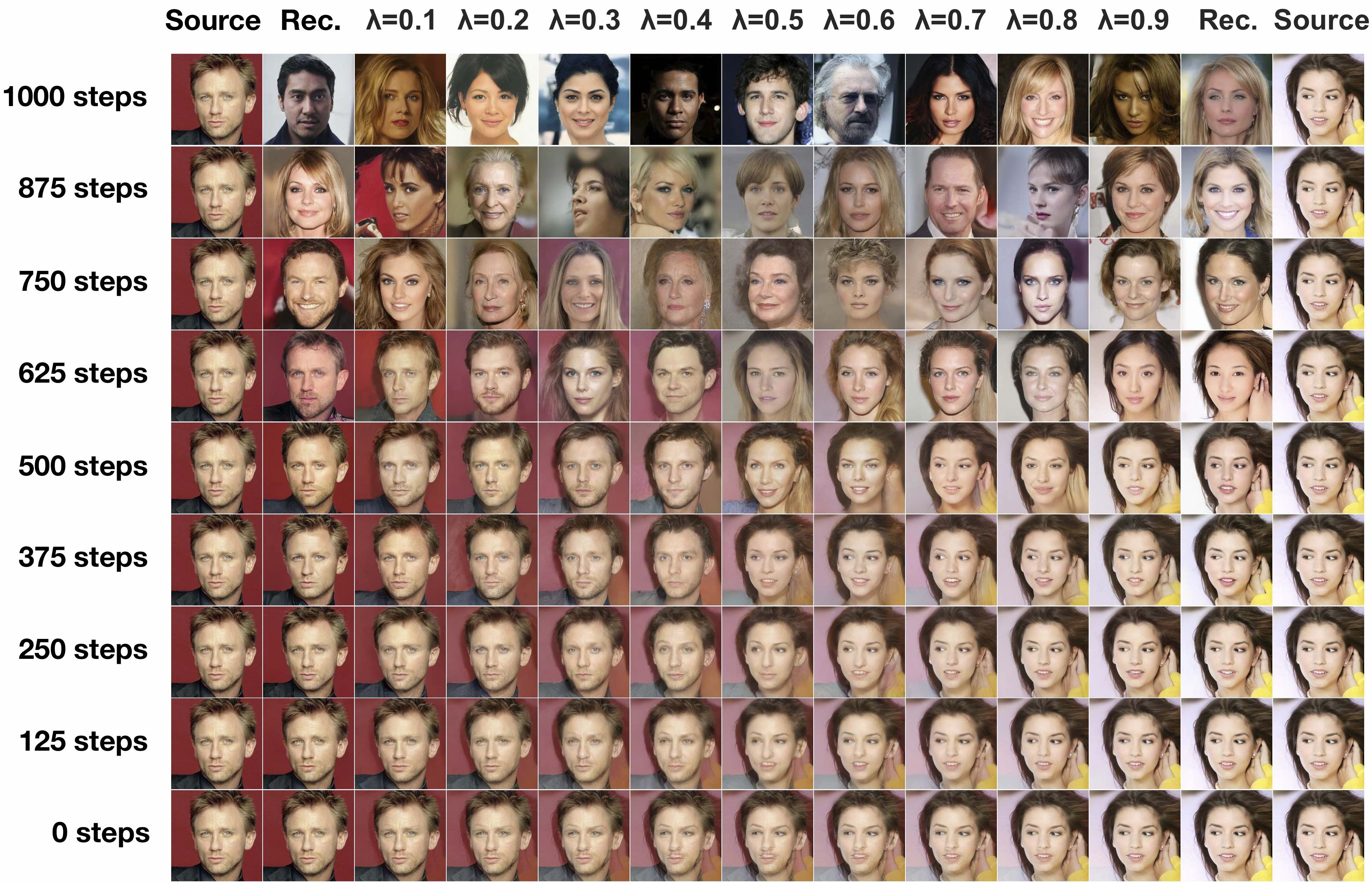

Coarse-to-fine interpolations. Lossiness can be controlled.

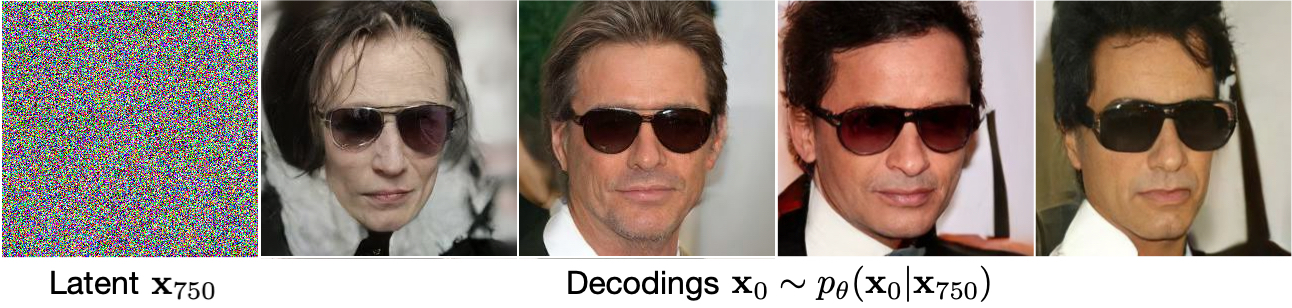

Latent variables encode meaningful high-level attributes about samples such as pose and eyewear.

Samples generated from the same latent share high-level attributes.

@article{ho2020denoising,
  title={Denoising Diffusion Probabilistic Models},
  author={Jonathan Ho and Ajay Jain and Pieter Abbeel},
  year={2020},
  journal={arXiv preprint arXiv:2006.11239}
}